background

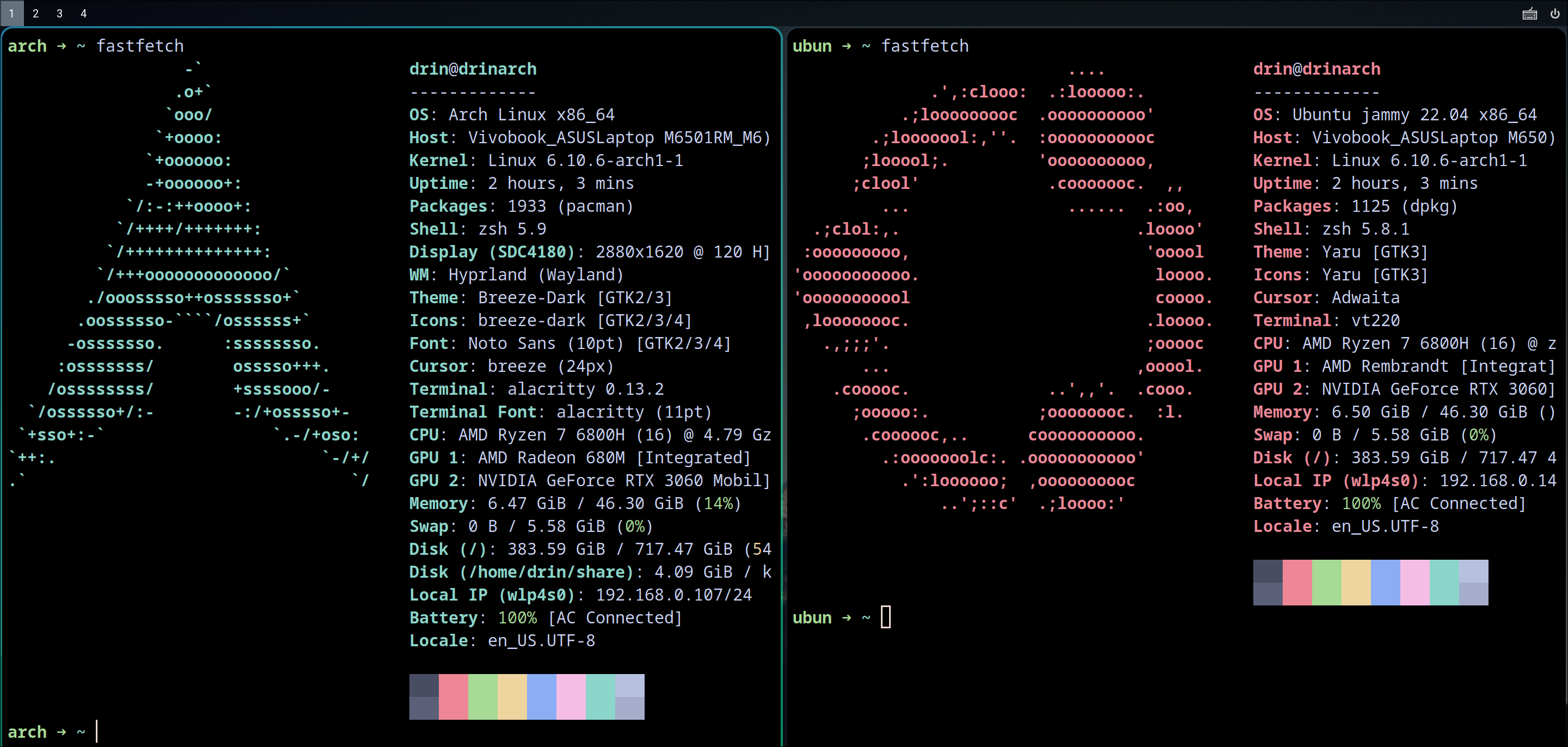

I am an Arch user, drawn to it by its very active community. However, much of the software in academia and industry, especially robotics software, is designed for Ubuntu. The ROS AUR package is out of date, since a package with so many dependencies is difficult to maintain. Furthermore, many libraries for point cloud processing and navigation are developed on Ubuntu, which means their developers usually maintain them only for Ubuntu.

create Ubuntu environment

- Install debootstrap:

  paru -S debootstrap

- To manage the container with machinectl, create the Ubuntu environment in /var/lib/machines/ with root permission:

  debootstrap --include=dbus,systemd-container --components=main,universe,multiverse jammy jammy https://mirrors.tuna.tsinghua.edu.cn/ubuntu

- Enter the container with

  systemd-nspawn -D jammy

- The network of Ubuntu might be broken now.

  - configure systemd-resolved. Edit the DNS line in /etc/systemd/resolved.conf:

    DNS=8.8.8.8

  - (optionally) configure DNS manually:

    sudo systemctl disable --now systemd-resolved

    echo 'nameserver 8.8.8.8' > /etc/resolv.conf
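The bootstrap and manual DNS steps above can be collected into two small helpers. This is only a sketch: the function names `bootstrap_cmd` and `write_resolv_conf` are made up for illustration, and writing resolv.conf from the host into the container tree is an assumed alternative to running the echo inside the container.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, values come from the steps above.

# Print the debootstrap command for a suite, target directory and mirror,
# so it can be reviewed before being run as root.
bootstrap_cmd() {
    suite="$1"
    target="$2"
    mirror="$3"
    printf 'debootstrap --include=dbus,systemd-container --components=main,universe,multiverse %s %s %s\n' \
        "$suite" "$target" "$mirror"
}

# Write a static resolv.conf into a container root directory.
# Assumption: this runs on the host, with write access to the container tree.
write_resolv_conf() {
    root="$1"
    nameserver="$2"
    mkdir -p "$root/etc"
    # Remove any existing file or symlink first, so the redirect below
    # creates a regular file instead of writing through a link.
    rm -f "$root/etc/resolv.conf"
    printf 'nameserver %s\n' "$nameserver" > "$root/etc/resolv.conf"
}
```

For the setup described here, the calls would be `bootstrap_cmd jammy /var/lib/machines/jammy https://mirrors.tuna.tsinghua.edu.cn/ubuntu` and `write_resolv_conf /var/lib/machines/jammy 8.8.8.8`, both needing root for the real paths.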

At first, I used podman (docker) for a while, but I found them too heavy. I don’t care about images; I just need a container that can run Ubuntu, and the faster the better. I don’t mind the dirty directories. That’s why I chose systemd-nspawn in the end.

passthrough Nvidia GPU

Another requirement is access to the discrete Nvidia GPU on my laptop, since some of my development uses CUDA. See ArchWiki Systemd-nspawn#Nvidia_GPUs. Create the file /etc/systemd/system/systemd-nspawn@.service.d/nvidia-gpu.conf:

[Service]

ExecStart=

ExecStart=systemd-nspawn --quiet --keep-unit --boot --link-journal=try-guest --machine=%i \

--bind=/dev/dri \

--bind=/dev/shm \

--bind=/dev/nvidia0 \

--bind=/dev/nvidiactl \

--bind=/dev/nvidia-modeset \

--bind=/usr/bin/nvidia-bug-report.sh:/usr/bin/nvidia-bug-report.sh \

--bind=/usr/bin/nvidia-cuda-mps-control:/usr/bin/nvidia-cuda-mps-control \

--bind=/usr/bin/nvidia-cuda-mps-server:/usr/bin/nvidia-cuda-mps-server \

--bind=/usr/bin/nvidia-debugdump:/usr/bin/nvidia-debugdump \

--bind=/usr/bin/nvidia-modprobe:/usr/bin/nvidia-modprobe \

--bind=/usr/bin/nvidia-ngx-updater:/usr/bin/nvidia-ngx-updater \

--bind=/usr/bin/nvidia-persistenced:/usr/bin/nvidia-persistenced \

--bind=/usr/bin/nvidia-powerd:/usr/bin/nvidia-powerd \

--bind=/usr/bin/nvidia-sleep.sh:/usr/bin/nvidia-sleep.sh \

--bind=/usr/bin/nvidia-smi:/usr/bin/nvidia-smi \

--bind=/usr/bin/nvidia-xconfig:/usr/bin/nvidia-xconfig \

--bind=/usr/lib/gbm/nvidia-drm_gbm.so:/usr/lib/x86_64-linux-gnu/gbm/nvidia-drm_gbm.so \

--bind=/usr/lib/libEGL_nvidia.so:/usr/lib/x86_64-linux-gnu/libEGL_nvidia.so \

--bind=/usr/lib/libGLESv1_CM_nvidia.so:/usr/lib/x86_64-linux-gnu/libGLESv1_CM_nvidia.so \

--bind=/usr/lib/libGLESv2_nvidia.so:/usr/lib/x86_64-linux-gnu/libGLESv2_nvidia.so \

--bind=/usr/lib/libGLX_nvidia.so:/usr/lib/x86_64-linux-gnu/libGLX_nvidia.so \

--bind=/usr/lib/libcuda.so:/usr/lib/x86_64-linux-gnu/libcuda.so \

--bind=/usr/lib/libnvcuvid.so:/usr/lib/x86_64-linux-gnu/libnvcuvid.so \

--bind=/usr/lib/libnvidia-allocator.so:/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so \

--bind=/usr/lib/libnvidia-cfg.so:/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so \

--bind=/usr/lib/libnvidia-egl-gbm.so:/usr/lib/x86_64-linux-gnu/libnvidia-egl-gbm.so \

--bind=/usr/lib/libnvidia-eglcore.so:/usr/lib/x86_64-linux-gnu/libnvidia-eglcore.so \

--bind=/usr/lib/libnvidia-encode.so:/usr/lib/x86_64-linux-gnu/libnvidia-encode.so \

--bind=/usr/lib/libnvidia-fbc.so:/usr/lib/x86_64-linux-gnu/libnvidia-fbc.so \

--bind=/usr/lib/libnvidia-glcore.so:/usr/lib/x86_64-linux-gnu/libnvidia-glcore.so \

--bind=/usr/lib/libnvidia-glsi.so:/usr/lib/x86_64-linux-gnu/libnvidia-glsi.so \

--bind=/usr/lib/libnvidia-glvkspirv.so:/usr/lib/x86_64-linux-gnu/libnvidia-glvkspirv.so \

--bind=/usr/lib/libnvidia-ml.so:/usr/lib/x86_64-linux-gnu/libnvidia-ml.so \

--bind=/usr/lib/libnvidia-ngx.so:/usr/lib/x86_64-linux-gnu/libnvidia-ngx.so \

--bind=/usr/lib/libnvidia-opticalflow.so:/usr/lib/x86_64-linux-gnu/libnvidia-opticalflow.so \

--bind=/usr/lib/libnvidia-ptxjitcompiler.so:/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so \

--bind=/usr/lib/libnvidia-rtcore.so:/usr/lib/x86_64-linux-gnu/libnvidia-rtcore.so \

--bind=/usr/lib/libnvidia-tls.so:/usr/lib/x86_64-linux-gnu/libnvidia-tls.so \

# does not exist on my machine: --bind=/usr/lib/libnvidia-vulkan-producer.so:/usr/lib/x86_64-linux-gnu/libnvidia-vulkan-producer.so \

--bind=/usr/lib/libnvoptix.so:/usr/lib/x86_64-linux-gnu/libnvoptix.so \

--bind=/usr/lib/modprobe.d/nvidia-utils.conf:/usr/lib/x86_64-linux-gnu/modprobe.d/nvidia-utils.conf \

--bind=/usr/lib/nvidia/wine/_nvngx.dll:/usr/lib/x86_64-linux-gnu/nvidia/wine/_nvngx.dll \

--bind=/usr/lib/nvidia/wine/nvngx.dll:/usr/lib/x86_64-linux-gnu/nvidia/wine/nvngx.dll \

--bind=/usr/lib/nvidia/xorg/libglxserver_nvidia.so:/usr/lib/x86_64-linux-gnu/nvidia/xorg/libglxserver_nvidia.so \

--bind=/usr/lib/vdpau/libvdpau_nvidia.so:/usr/lib/x86_64-linux-gnu/vdpau/libvdpau_nvidia.so \

--bind=/usr/lib/xorg/modules/drivers/nvidia_drv.so:/usr/lib/x86_64-linux-gnu/xorg/modules/drivers/nvidia_drv.so \

--bind=/usr/share/X11/xorg.conf.d/10-nvidia-drm-outputclass.conf:/usr/share/X11/xorg.conf.d/10-nvidia-drm-outputclass.conf \

--bind=/usr/share/dbus-1/system.d/nvidia-dbus.conf:/usr/share/dbus-1/system.d/nvidia-dbus.conf \

--bind=/usr/share/egl/egl_external_platform.d/15_nvidia_gbm.json:/usr/share/egl/egl_external_platform.d/15_nvidia_gbm.json \

--bind=/usr/share/glvnd/egl_vendor.d/10_nvidia.json:/usr/share/glvnd/egl_vendor.d/10_nvidia.json \

--bind=/usr/share/licenses/nvidia-utils/LICENSE:/usr/share/licenses/nvidia-utils/LICENSE \

--bind=/usr/share/vulkan/icd.d/nvidia_icd.json:/usr/share/vulkan/icd.d/nvidia_icd.json \

--bind=/usr/share/vulkan/implicit_layer.d/nvidia_layers.json:/usr/share/vulkan/implicit_layer.d/nvidia_layers.json

DeviceAllow=/dev/dri rw

DeviceAllow=/dev/shm rw

DeviceAllow=/dev/nvidia0 rw

DeviceAllow=/dev/nvidiactl rw

DeviceAllow=/dev/nvidia-modeset rw

usage
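The bind list in the drop-in above mirrors the files shipped by the host driver package, so it may need regenerating after a driver update and before the container is started. A minimal sketch of a generator follows. It assumes the same mapping the list uses, where host paths under /usr/lib move to /usr/lib/x86_64-linux-gnu in the guest and all other paths stay unchanged. The function name `nvidia_bind_args` is made up for illustration.

```shell
#!/bin/sh
# Sketch only: reads host file paths (one per line) on stdin and prints
# one "--bind=HOST:GUEST \" line per file.
# Assumption: Arch keeps libraries directly under /usr/lib, while Ubuntu
# expects them under /usr/lib/x86_64-linux-gnu.
nvidia_bind_args() {
    while IFS= read -r host; do
        case "$host" in
            ''|*/)
                # Skip blank lines and directory entries (trailing slash).
                continue
                ;;
            /usr/lib/*)
                guest="/usr/lib/x86_64-linux-gnu/${host#/usr/lib/}"
                ;;
            *)
                guest="$host"
                ;;
        esac
        # Each line ends with a backslash so it can be pasted into ExecStart=.
        printf '%s%s:%s \\\n' '--bind=' "$host" "$guest"
    done
}
```

One possible input is `pacman -Qlq nvidia-utils | nvidia_bind_args`. That output would likely contain more files than the hand-picked list above, so it still needs review, and the trailing backslash on the last line has to be removed before the DeviceAllow lines.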

sudo machinectl start jammy

sudo machinectl poweroff jammy

sudo machinectl enable jammy # auto-start

install package

- update

  Modify /etc/apt/sources.list, adding jammy-updates and jammy-backports:

  deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-updates main restricted universe multiverse

  deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-backports main restricted universe multiverse

  Then run sudo apt update.

- cuda

  According to this, we only need to install the required cuda-toolkit in the container, regardless of the CUDA version installed on the Arch host.

  Following Nvidia’s instructions, install the specific version of cuda-toolkit.

- ros

  Just follow the ROS wiki and everything will work correctly.
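The sources.list change in the update step can also be generated rather than typed. The helper below is a sketch; the name `extra_sources` and its argument order are made up for illustration, and the component list is copied from the two lines shown in that step.

```shell
#!/bin/sh
# Sketch only: print the jammy-updates / jammy-backports style source lines
# for a given mirror URL and suite name.
extra_sources() {
    mirror="$1"
    suite="$2"
    for pocket in updates backports; do
        printf 'deb %s %s-%s main restricted universe multiverse\n' \
            "$mirror" "$suite" "$pocket"
    done
}
```

Inside the container, something like `extra_sources https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy >> /etc/apt/sources.list` followed by `apt update` should have the same effect as editing the file by hand.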

GUI

I use Wayland. To run GUI applications, just set the DISPLAY environment variable in the container to the value it has on the host; nothing else needs to be bound specifically.

At least it runs well on my machine:

export DISPLAY=:1

reference

https://wiki.archlinux.org/title/Systemd-nspawn

https://www.kxxt.dev/blog/systemd-nspawn-container-for-ros